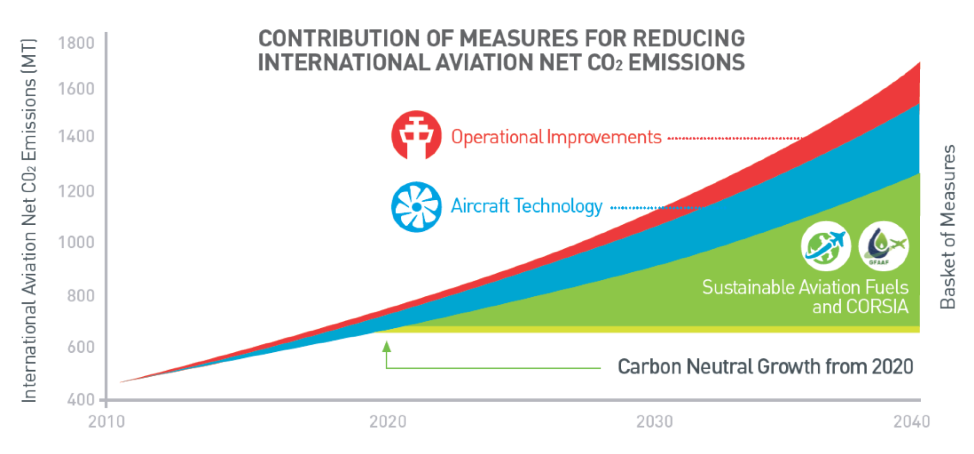

If you have to choose one year when you won't fly, this year, 2020, is the one to choose. Why? Because CORSIA.

CORSIA is not a novel virus, but the "Carbon Offsetting and Reduction Scheme for International Aviation". In a nutshell, the aviation industry says it will freeze its CO2 emissions at a fixed level. In reality, aviation emissions are still going to grow. The airlines will just pay someone else to reduce emissions by the same amount that aviation emissions rise - that is the "Offsetting" word in CORSIA. If that sounds like greenwashing, well, it pretty much is. But that was expected. Getting every country and airline aboard CORSIA would not have been possible if the scheme actually had teeth. So it's pretty much a joke.

The first phase of CORSIA starts next year, so emissions are frozen at year 2020 levels. Due to certain recent events, lots of flights have already been cancelled - which means the reference-year aviation emissions are already a lot lower than the aviation industry was expecting. If we avoid flying this year, aviation emissions will be frozen at an even lower level. This will increase the cost of CO2 offsetting for airlines, and the joke is going to be on them.

So consider skipping business travel, and taking your holiday trip this year with something other than a plane. Wouldn't recommend a cruise ship, tho...

We need to turn the tables. If they want something impossible, it should be up to them to implement it.

It is simply unfair to require each online provider to implement an AI to detect copyright infringement, manage a database of copyrighted content and pay the costs of running it all... and then get slapped with a lawsuit anyway, since copyrighted content still slips through.

The burden of implementing #uploadfilter should be on the copyright holder organizations. Implement it as a SaaS. YouTube and other web platforms call your API and pay $0.01 each time pirated content is detected. On the other side, to ensure the correctness of the filter, copyright holders have to pay any lost revenue, court costs and so on for each false positive.

Filtering uploads is still problematic. But it's now the copyright holders' problem. Instead of people blaming web companies for poor filters, it's now the copyright holders who have to answer to the public why their filters are rejecting content that doesn't belong to them.

This ignores the reality where the majority of developers do cross-platform development every day. They develop on Macs and Windows PCs and deploy on Linux servers or mobile phones. The two biggest Linux success stories, cloud and Android, are built on cross-platform development. Yes, cross-platform development sucks. But it's just one of the many things that suck in software development.

More importantly, the ship of "local dev environment" has long since sailed. Using Linus's other great innovation, git, developers push their code to a Microsoft server, which triggers a Rube Goldberg machine of software build, container assembly, unit tests, deployment to a test environment and so on - all on cloud servers.

Yes, the ability to easily buy a cheap whitebox PC from CompUSA was an important factor in making x86 dominate the server space. But people get cheap servers from the cloud now, and even that is going out of fashion. Services like AWS Lambda abstract the whole server away, and the instruction set becomes irrelevant. Which CPU and architecture will be used to run these "serverless" services is not going to depend on developers having Arm Linux desktop PCs.

Of course there are still plenty of people like me who use a Linux desktop and run things locally. But in the big picture things are just going one way: the way where it gets easier to test things in your git-based CI loop than in a local development setup.

But like Linus, I still do want to see a powerful PC-like Arm NUC or laptop. One that could run a mainline Linux kernel and offer a PC-like desktop experience. Not because Arm depends on it to succeed in the server space (what it needs there is out of scope for this blog post) - but because PCs are useful in their own right.

```
...
processor       : 7
BogoMIPS        : 2.40
Features        : fp asimd evtstrm aes pmull sha1 sha2 crc32 cpuid
CPU implementer : 0x41
CPU architecture: 8
CPU variant     : 0x0
CPU part        : 0xd03
CPU revision    : 3
```

Or maybe like:

```
$ cat /proc/cpuinfo
processor       : 0
model name      : ARMv7 Processor rev 2 (v7l)
BogoMIPS        : 50.00
Features        : half thumb fastmult vfp edsp thumbee vfpv3 tls idiva idivt vfpd32 lpae
CPU implementer : 0x56
CPU architecture: 7
CPU variant     : 0x2
CPU part        : 0x584
CPU revision    : 2
...
```

The "CPU implementer" and "CPU part" fields could be mapped to human-readable strings, but the kernel developers are strongly against doing that in the kernel. Therefore, on to the next idea: parse in userspace. It turns out there is a common tool that almost everyone has installed and that already does similar things: lscpu(1) from util-linux. So I proposed a patch to util-linux that does the ID mapping on arm/arm64, and it was accepted! Using lscpu from util-linux 2.32 (hopefully to be released soon), the above two systems look like:

```
Architecture:        aarch64
Byte Order:          Little Endian
CPU(s):              8
On-line CPU(s) list: 0-7
Thread(s) per core:  1
Core(s) per socket:  4
Socket(s):           2
NUMA node(s):        1
Vendor ID:           ARM
Model:               3
Model name:          Cortex-A53
Stepping:            r0p3
CPU max MHz:         1200.0000
CPU min MHz:         208.0000
BogoMIPS:            2.40
L1d cache:           unknown size
L1i cache:           unknown size
L2 cache:            unknown size
NUMA node0 CPU(s):   0-7
Flags:               fp asimd evtstrm aes pmull sha1 sha2 crc32 cpuid
```

And

```
$ lscpu
Architecture:        armv7l
Byte Order:          Little Endian
CPU(s):              4
On-line CPU(s) list: 0-3
Thread(s) per core:  1
Core(s) per socket:  4
Socket(s):           1
Vendor ID:           Marvell
Model:               2
Model name:          PJ4B-MP
Stepping:            0x2
CPU max MHz:         1333.0000
CPU min MHz:         666.5000
BogoMIPS:            50.00
Flags:               half thumb fastmult vfp edsp thumbee vfpv3 tls idiva idivt vfpd32 lpae
```

As we can see, lscpu is quite versatile and can show more information than just what is available in cpuinfo.
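To make the userspace-mapping idea concrete, here is a minimal sketch of the kind of lookup involved. It is not the actual lscpu code: the function name `decode_cpu` is made up for illustration, and the table contains only the two implementer/part pairs that appear in the outputs above, while a real tool needs a much longer list. Unknown IDs are passed through unchanged.

```shell
#!/bin/sh
# Hypothetical helper, not part of util-linux: reads /proc/cpuinfo-style
# text on stdin and prints "<vendor> <model>" for every "CPU part" line.
decode_cpu() {
    awk -F': *' '
        BEGIN {
            # Only the IDs shown in this post; everything else is unknown.
            vendor["0x41"] = "ARM"
            vendor["0x56"] = "Marvell"
            part["0x41/0xd03"] = "Cortex-A53"
            part["0x56/0x584"] = "PJ4B-MP"
        }
        # Strip the padding between the field name and the colon.
        { gsub(/[ \t]+$/, "", $1) }
        # Remember the implementer; it precedes the part in each CPU block.
        $1 == "CPU implementer" { imp = $2 }
        $1 == "CPU part" {
            key = imp "/" $2
            v = (imp in vendor) ? vendor[imp] : imp
            m = (key in part) ? part[key] : $2
            print v, m
        }
    '
}
```

On an Arm machine, something like `decode_cpu < /proc/cpuinfo | sort -u` would print one line per distinct core type. Part numbers are keyed together with the implementer because the same part ID can mean different things for different vendors.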

```
$ apt-cache search cross-build-essential
crossbuild-essential-arm64 - Informational list of cross-build-essential packages for
crossbuild-essential-armel - ...
crossbuild-essential-armhf - ...
crossbuild-essential-mipsel - ...
crossbuild-essential-powerpc - ...
crossbuild-essential-ppc64el - ...
```

Let's walk through the exact steps. But first: while you can do all this in your desktop PC's rootfs, it is wiser to contain yourself. Fortunately, Debian comes with a container tool out of the box:

```
sudo debootstrap stretch /var/lib/container/stretch http://deb.debian.org/debian
echo "stretch_cross" | sudo tee /var/lib/container/stretch/etc/debian_chroot
sudo systemd-nspawn -D /var/lib/container/stretch
```

Then we set up a cross-building environment for arm64 inside the container:

```
# Tell dpkg we can install arm64
dpkg --add-architecture arm64
# Add src line to make "apt-get source" work
echo "deb-src http://deb.debian.org/debian stretch main" >> /etc/apt/sources.list
apt-get update
# Install cross-compiler and other essential build tools
apt install --no-install-recommends build-essential crossbuild-essential-arm64
```

Now that we have a nice build environment, let's choose something more complicated than the usual kernel/BusyBox to cross-build: QEMU.

```
# Get qemu sources from debian
apt-get source qemu
cd qemu-*
# New in stretch: build-dep works in unpacked source tree
apt-get build-dep -a arm64 .
# Cross-build Qemu for arm64
dpkg-buildpackage -aarm64 -j6 -b
```

That works perfectly for QEMU. For other packages, challenges may appear. For example, you may have to set the "nocheck" flag to skip build-time unit tests, or some of the build dependencies may not be multiarch-enabled. So work continues :)
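For the "nocheck" case, a rough sketch of what the invocation could look like follows. `DEB_BUILD_OPTIONS=nocheck` is the conventional Debian way to ask a package to skip its test suite, and the `nocheck` build profile drops build dependencies that are only needed for tests. Whether a given package actually honours either of them depends on its packaging, so treat this as an assumption to verify per package. The wrapper name `cross_build_nocheck` is made up for this example.

```shell
#!/bin/sh
# Hypothetical wrapper: cross-build the unpacked source package in the
# current directory with build-time tests disabled.
# Usage: cross_build_nocheck [arch]   (arch defaults to arm64)
cross_build_nocheck() {
    arch="${1:-arm64}"
    # nocheck as a build option skips the test suite (if the package
    # supports it); as a build profile it skips test-only build-deps.
    DEB_BUILD_OPTIONS="nocheck" DEB_BUILD_PROFILES="nocheck" \
        dpkg-buildpackage -a"$arch" -b
}
```

Run it from inside the unpacked source tree, in the same container as the steps above.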